How to Increase Website Conversions: An Actionable Playbook for SDR Leaders

Getting more people to convert on your website is all about one thing: methodically finding and removing friction for your ideal buyers. For a Sales Development Representative (SDR) leader, this isn't about vanity metrics; it's about turning your website into the team's best qualifier. It’s a blend of digging into buyer-intent data, smoothing out the path to a demo, and writing copy that guides high-value visitors to take the next step. The goal is to make their journey so smooth and obviously valuable that booking a meeting with your SDR feels like the most natural thing to do.

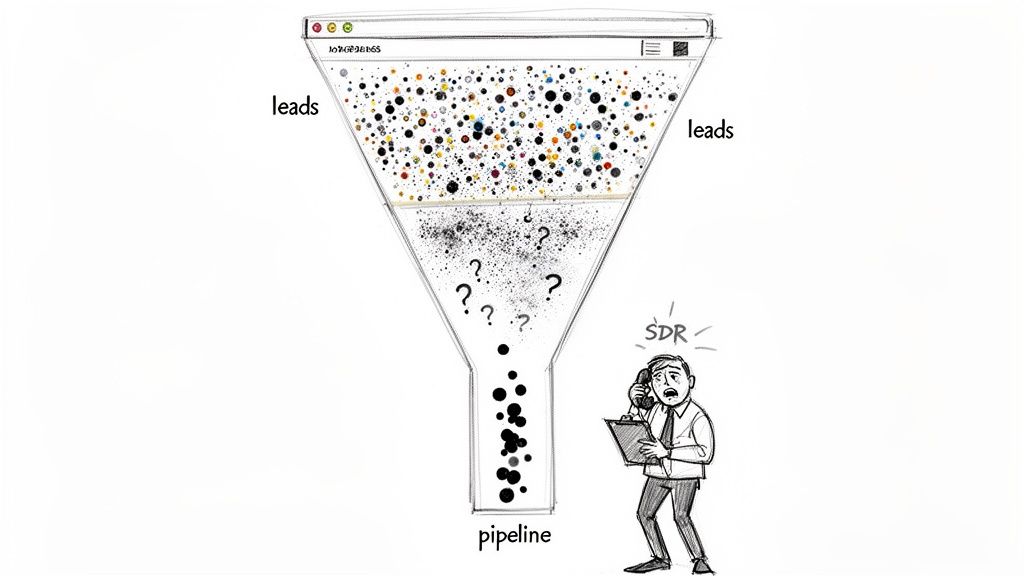

Why Your Website Funnel Is Failing Your SDRs

Let's be real for a second. Your SDRs are probably drowning in a sea of low-quality "leads." While the marketing team pops champagne over a spike in form fills, your sales floor is telling a completely different story. It’s a story of dead-end calls, ignored emails, and prospects who have no idea who you are and no intention of buying.

This is the classic marketing-sales disconnect that grinds pipeline growth to a halt, leaving your SDRs to do the dirty work of sifting through garbage.

The problem starts with a flawed definition of "conversion." A traditional website funnel treats every action the same. A student downloading a top-of-funnel eBook gets logged with the same initial weight as a direct demo request from a VP at a target account. For an SDR, these two "leads" couldn't be more different, yet they often land in the queue with the same priority.

The result is painful and expensive. SDRs burn their most precious resource—time—chasing ghosts. They spend their days slogging through lists of contacts with zero buying intent, leading to frustration, burnout, and a lot of missed quotas. Your funnel isn't filtering for quality; it’s just collecting names in a spreadsheet and passing the qualification burden to your sales team.

The Volume Game vs. The Quality Signal

The old playbook was all about quantity. The thinking was, if you pour enough "leads" into the top, a predictable number of deals will magically pop out the bottom. For an SDR team, this "spray and pray" model is a recipe for inefficiency.

Think about the difference between these two leads from an SDR's perspective:

- The Volume Lead: Someone downloads a whitepaper. They could be a competitor, a student doing research, or someone just kicking tires. The lead lands in the SDR's queue with zero context, kicking off a generic, and likely ignored, outreach sequence. This is a cold call with a flimsy excuse.

- The Quality Signal: Someone from your ideal customer profile (ICP) hits your pricing page twice this week, then watches a 15-minute product demo. That’s not just a lead; it’s a flare going up, signaling real buying intent. For an SDR, this is a hot, contextualized lead that they can engage with a highly relevant message, dramatically increasing the chance of booking a meeting.

Your website needs to be an intelligent filter, not just a digital fishnet. It must learn to tell the difference between passive curiosity and active evaluation, so it can hand-deliver qualified opportunities to your SDRs.

The goal is to turn your website from a generator of noise into a source of clear, actionable signals. That's how you empower your SDRs to spend their time only on the accounts that are actually ready to talk.

From Vague Leads to Actionable Intelligence

When your website conversions aren't aligned with what SDRs actually need, you get a broken system. Marketing hits its MQL number, but the sales team misses its revenue target. Everyone loses.

This is exactly why a quality-first approach to conversion rate optimization (CRO) is so critical for SDR teams. By zeroing in on high-intent actions, you arm your SDRs with the context they need to start conversations that matter. Instead of asking "Are you the right person to talk to?", they can open with "I saw you were looking at our integration with Salesforce; I can show you exactly how that works."

This shift takes more than just tweaking a form field, though that's a good place to start. For some great ideas, check out these actionable conversion rate optimization tips for forms, which are often the last hurdle between you and a great lead.

Ultimately, tying marketing activities to real sales outcomes is the cornerstone of a modern go-to-market motion. This playbook will walk you through turning your site from a lead graveyard into a true pipeline-generating machine for your SDRs.

Build a Conversion Funnel on Trust and Social Proof

Let's be blunt. Vague promises of "unparalleled ROI" and generic marketing slogans just don't land with savvy B2B buyers anymore. A VP of RevOps has heard it all before, and their default setting is skepticism. This skepticism is what your SDRs have to overcome on every single call.

To cut through that noise, you have to stop making claims and start proving value. This is where trust and social proof become your SDR team's most valuable assets. Instead of you telling prospects your solution is great, you let their peers do the talking. It’s a fundamental shift that directly fuels your pipeline with warmer, more qualified leads for your SDRs, making their outreach more of a welcome follow-up than a cold interruption.

Moving Beyond the Logo Wall

The old way of showing social proof? The static "logo wall." It's a grid of impressive company logos sitting on a page, and while it's better than nothing, it's a passive approach. It says, "these companies trust us," but it completely misses the prospect's most important question: "So what? What's in it for me?"

A modern, high-conversion strategy embeds social proof right where decisions are actually being made. This isn't about a passive display; it's about making it an active, persuasive part of the user's journey. This approach pre-handles objections and builds credibility before your SDR even says hello.

Just look at the difference in these two scenarios from an SDR's point of view:

| The Old Way (Static Logo Wall) | The New Way (Contextual Proof) |
|---|---|
| A prospect browses your "Customers" page and sees a familiar logo. | A prospect is on your pricing page, and a testimonial from a peer in their industry appears right next to the "Request a Demo" button. |
| The SDR gets a generic "website inquiry" lead with zero context and has to start the conversation from scratch, building trust from the ground up. | The SDR gets a lead alert showing the prospect engaged with the pricing page and a specific case study, giving them an instant, powerful opening line: "I saw you were checking out how [Similar Company] solved [Problem]..." |

The difference is night and day. The first lead is cold. The second is pre-warmed with validation from a source they already trust, making your SDR's job infinitely easier and their conversation far more relevant.

Leveraging Real Stories and User Content

Authentic stories from real customers are your best sales tool, period. These narratives aren't just fluff—they are concrete evidence that your solution solves real-world problems. When you gather them and place them strategically, you build a powerful case for your product before an SDR even sends the first email.

The data absolutely backs this up. An analysis of over 1,200 websites showed that pages featuring User-Generated Content (UGC) have a 3.2% conversion rate. That rate jumps by another 3.8% when visitors simply scroll through it. But the real magic happens when users actually engage with that content—their likelihood of converting doubles, boosting rates by an incredible 102%.

Your goal is to make it impossible for a high-intent prospect to miss relevant social proof at their moment of decision. This isn't bragging; it's reassurance that helps your SDRs close for the meeting.

Here’s how you can put this into action right now to help your team:

- Case Studies: Turn customer wins into detailed stories. Highlight the specific pain points, the solution you provided, and—most importantly—the quantifiable results. Then, make them easy to find on your relevant feature pages. Your SDRs can use these as powerful follow-up assets.

- Testimonials and Quotes: Pull the most powerful one-liners from happy customers. Place them next to key CTAs, on landing pages, and even inside your demo request forms. This builds confidence at the exact moment a prospect might hesitate.

- Reviews and Ratings: Integrate reviews from third-party sites like G2 or Capterra. This adds a layer of unbiased credibility that you just can't create on your own, giving your SDRs third-party validation to reference in their outreach.

When you collect and deploy these assets, you're not just decorating your website. You're building a smarter funnel that filters for intent and validates interest on the fly. To get a better handle on gathering this kind of feedback, check out these powerful voice of customer examples.

This entire approach ensures that when a prospect finally raises their hand, they've already been convinced by people they trust. That’s how you give your SDRs the ultimate advantage: a warmer, more receptive audience.

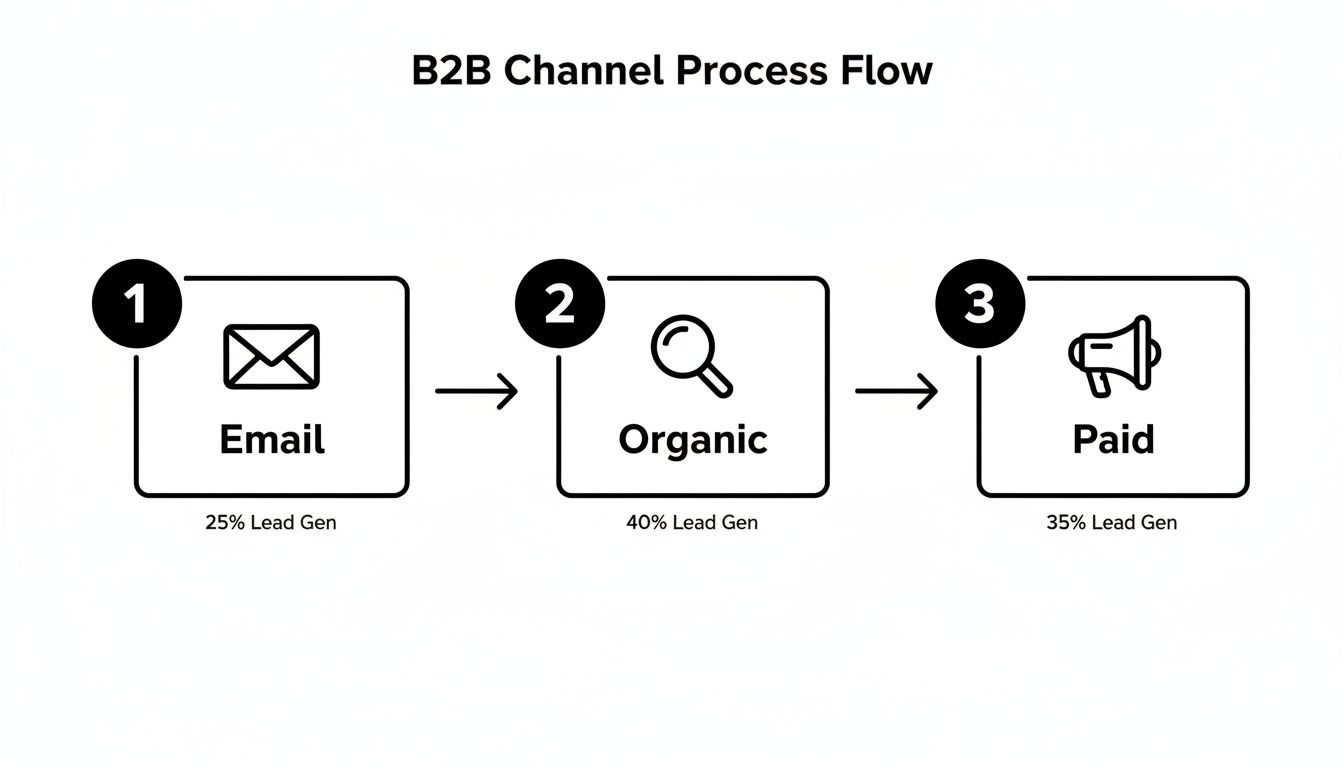

Focus Your Efforts On High-Converting Channels

Not every lead is created equal.

That’s a truth every SDR leader learns the hard way. Pouring resources into channels that pump up your traffic numbers but deliver low-intent prospects is a fast track to a burnt-out SDR team and a pipeline full of noise. The real key to increasing website conversions that actually close is a surgical focus on the channels that bring you people ready to talk business.

Think about it. Not all traffic is a signal of buying intent. Someone who clicks a paid ad for "sales dialer pricing" is in a completely different headspace than someone who lands on a blog post about "cold calling tips" from a Google search. One is actively shopping for a solution; the other is still in the early research phase. Arming your SDRs means knowing this difference and aligning your entire strategy around it.

Comparing High-Intent B2B Channels

For most B2B tech companies I've worked with, three channels consistently rise to the top for generating real, valuable conversions that SDRs love: paid search, organic search, and targeted email campaigns. Each one requires a completely different playbook because the user's context and expectations are poles apart. Get this wrong, and you're just lighting your ad budget on fire and flooding your SDRs with unqualified leads.

A visitor from a paid ad expects an immediate, relevant answer. They clicked on a promise, and your landing page better deliver on it—instantly. Contrast that with a visitor from organic search, who is often looking for an expert to solve their problem. Your job there is to educate and build trust before you even think about asking for a conversion.

And to really dial in your strategy across these platforms, you'll eventually need to look at advanced marketing platforms that can unify the customer journey.

The single biggest mistake is treating all website visitors the same. A channel-specific strategy acknowledges the visitor's mindset and tailors the experience to match their intent, dramatically increasing the odds of a meaningful conversion that your SDR team can actually close.

This understanding is also crucial for setting realistic benchmarks and knowing where to double down.

B2B Conversion Rate Benchmarks by Channel

This table compares the average conversion rates for key B2B marketing channels, helping you prioritize efforts and set realistic performance goals. More importantly, it shows what each signal means for your SDRs.

| Channel | Average Conversion Rate | Best For | SDR Action Signal |
|---|---|---|---|
| Paid Search | 3.2% | Capturing immediate, bottom-of-funnel demand. | 🔥 Hot: High-intent keywords signal an active buying cycle. Follow up immediately. |
| Organic Search | 2.7% | Building trust and capturing mid-funnel research intent. | 🌡️ Warm: Solved a problem; now ready for the next step. Nurture with relevant content. |
| Email Marketing | 2.6% | Nurturing known contacts and driving specific actions. | 🌡️ Warm: Engaged with targeted content; shows continued interest. Perfect for personalized outreach. |
| Social Media | 1.9% | Brand awareness and top-of-funnel engagement. | 🧊 Cold: Typically lower intent unless from a direct offer ad. Route to automated nurture sequences. |

While the numbers might seem close on the surface, the quality and intent behind them are vastly different. A 3.2% conversion rate from a "get a demo" paid campaign is infinitely more valuable to an SDR than a 2.7% rate from a "download our guide" organic post. This context is everything.
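One way to put the "SDR Action Signal" column to work is a small routing table that decides what happens to a lead based on its source channel. The sketch below is illustrative only: the channel keys, the `Route` type, and the action names are hypothetical and not tied to any particular CRM or marketing platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    temperature: str  # "hot", "warm", or "cold"
    action: str       # what should happen to the lead next


# Mirrors the "SDR Action Signal" column of the benchmark table.
# Keys and action names are assumptions made for this example.
CHANNEL_ROUTES = {
    "paid_search": Route("hot", "route_to_sdr_immediately"),
    "organic_search": Route("warm", "nurture_with_relevant_content"),
    "email": Route("warm", "personalized_sdr_outreach"),
    "social": Route("cold", "automated_nurture_sequence"),
}

# Unknown channels fall back to the most conservative treatment.
DEFAULT_ROUTE = Route("cold", "automated_nurture_sequence")


def route_lead(channel: str) -> Route:
    """Return the handling rule for a lead based on its source channel."""
    return CHANNEL_ROUTES.get(channel.strip().lower(), DEFAULT_ROUTE)


print(route_lead("paid_search").action)
```

In practice the offer matters as much as the channel (a "get a demo" campaign versus a "download our guide" post), so a real routing rule would likely key on both.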

Paid Search: A Laser-Focused Approach

Paid search is the ultimate high-intent channel. Period. When someone types a specific solution into a search bar, they are waving a flag that says "I am looking to buy." The key to converting this traffic is message matching. The promise you make in your ad copy has to perfectly align with the headline, content, and call-to-action on the landing page. No exceptions.

- The Landing Page: This isn’t a page on your main website; it’s a direct response to the ad, stripped of all distractions. Kill the main navigation, ditch the footer links—anything that could pull the visitor away from the one thing you want them to do.

- The Offer: It must be compelling and directly tied to the search query. If your ad says "custom demo," the page is built around booking that demo, not downloading a generic whitepaper.

- SDR Action Signal: A conversion from a high-intent paid search campaign is a five-alarm fire. This lead should be routed to an SDR immediately, with the full context of the search term and ad they engaged with. This is the definition of a hot lead.

Organic Search: The Trust-Building Engine

Organic search traffic is a different beast. It often has a much broader range of intent, from top-of-funnel research ("what is sales enablement") to bottom-of-funnel evaluation ("marketbetter.ai vs competitor"). Your goal here is to answer the user's question so damn well that you become their trusted authority, naturally guiding them toward a conversion that an SDR can act on.

Unlike a paid landing page, organic content needs to provide deep value upfront. This is where you solve their problem with comprehensive blog posts, tactical guides, or free tools. The conversion point should feel like a logical next step, not a hard sell. For example, a post on "How to Improve SDR Productivity" could lead to a CTA for a free trial of a tool like marketbetter.ai that automates those exact tasks. It just makes sense, and it gives the SDR a perfect, relevant conversation starter.

Targeted Email Campaigns: The Nurturing Path

Email gives you a direct line to a known audience, making it an incredibly powerful channel for nurturing leads and driving specific actions. Here, context is everything. An email to a list of webinar attendees should have a wildly different CTA than one sent to prospects who abandoned your pricing page.

The secret to high-converting emails is segmentation and personalization. Generic email blasts get generic results. By tailoring your message and offer to a specific segment's past behavior, you create a relevant, compelling reason for them to click through and convert—handing your SDRs a pre-warmed lead who has already shown they're paying attention.

Translate Website Signals into SDR Actions

This is where the rubber meets the road. All that hard work on your channels, messaging, and building trust culminates here, turning website traffic into actual pipeline for your SDR team.

Think about it. A prospect downloading a whitepaper is a decent signal. But what about a prospect from one of your target accounts hitting your pricing page three times in a week? That’s not a signal; it's a buying flare, screaming for your team’s immediate attention.

The next critical move is to build a seamless, automated workflow that takes these high-intent moments and turns them directly into prioritized tasks inside your SDR's CRM. This is the bridge that connects marketing insight to sales action, ensuring no hot lead ever goes cold.

No matter how prospects find you—email, organic search, or paid ads—they all land in the same funnel. Your job is to make sure their behavior gets interpreted and acted on, fast.

The Old Way vs. The Intent-Driven Workflow

For decades, the SDR workflow was just plain broken. It was all manual lists, guesswork, and brute force. Reps got a spreadsheet from a webinar and started dialing for dollars, completely blind to who was actually interested right now. They spent more time figuring out who to call than having valuable conversations.

Contrast that with a modern, intent-driven approach. Instead of a static list, SDRs get a dynamic queue of tasks prioritized by real-time buyer behavior. The system does the heavy lifting, serving up the next best action with all the context they need to have a meaningful conversation.

The goal is to eliminate the question, "What should I do next?" from your SDR's vocabulary. Their inbox should tell them exactly who to contact, why to contact them, and what to say.

This shift moves your team from a reactive, volume-based model to a proactive, precision-based one. The result? Fewer wasted calls, more meaningful conversations, and a huge boost in both productivity and morale.
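A dynamic, prioritized queue can be as simple as a sort over open tasks. The following is a minimal sketch, assuming each task already carries an intent score and an account-fit score on a 0 to 1 scale; the `Task` fields, the 60/40 weighting, and the cap of five tasks are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    contact: str
    reason: str          # why the SDR should reach out
    intent_score: float  # 0.0 to 1.0, derived from website behavior (assumed scale)
    fit_score: float     # 0.0 to 1.0, how closely the account matches the ICP (assumed scale)


def priority(task: Task, intent_weight: float = 0.6) -> float:
    """Blend intent and fit into one number; the 60/40 split is an arbitrary starting point."""
    return intent_weight * task.intent_score + (1 - intent_weight) * task.fit_score


def build_queue(tasks: list[Task], limit: int = 5) -> list[Task]:
    """Return the highest-priority tasks first, capped at `limit`."""
    return sorted(tasks, key=priority, reverse=True)[:limit]


queue = build_queue([
    Task("VP Sales, target account", "Visited pricing page twice", 0.9, 0.8),
    Task("Student", "Downloaded intro eBook", 0.2, 0.1),
    Task("RevOps manager", "Watched product demo", 0.7, 0.3),
])
for task in queue:
    print(task.contact, "->", task.reason)
```

Each task carries its own `reason`, so the queue answers both who to contact and why.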

Configuring Your System for Actionable Signals

So, how do you make this happen? You set up your system to listen for specific website signals and automatically trigger tasks in your CRM. This isn't about tracking every single page view. It’s about zeroing in on the handful of digital behaviors that scream "buying intent."

Here are a few high-impact triggers you should set up immediately to empower your SDR team:

- Pricing Page Visits: This is one of the strongest buying signals you can get. Set a trigger to create a high-priority task if a known contact from an ICP account visits this page more than once in a 7-day period.

- Key Content Downloads: Not all content is created equal. A "Beginner's Guide" download is top-of-funnel noise. An "Implementation Guide" or a "Competitor Comparison" PDF, on the other hand, signals someone much further down the rabbit hole. Flag these for immediate SDR follow-up.

- Demo or 'How It Works' Video Views: Someone watching a detailed product video is doing serious research. If a prospect watches more than 75% of a key product video, it’s a clear sign they’re actively evaluating you. This is a perfect reason for an SDR to reach out.

Once you configure these triggers, the magic happens. A prospect’s action on your site instantly creates a task in Salesforce or HubSpot. That task needs to include the prospect's name, company, the specific action they took (e.g., "Visited pricing page 3 times"), and a direct link to their record.
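The three triggers above can be sketched as a single rule function. This is a hedged illustration, not a real integration: `Event`, `evaluate`, the `/pricing` path, and the payload keys are all hypothetical, and the returned dictionary stands in for whatever task object your CRM actually expects.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Event:
    contact_name: str
    company: str
    is_icp_account: bool
    kind: str                    # "page_view", "download", or "video_view"
    target: str                  # page path, asset title, or video title
    timestamp: datetime
    watch_fraction: float = 0.0  # share of the video watched, 0.0 to 1.0


PRICING_PATH = "/pricing"  # assumed URL of the pricing page
HIGH_INTENT_ASSETS = {"Implementation Guide", "Competitor Comparison"}


def count_pricing_visits(events: list[Event], contact: str, now: datetime, days: int = 7) -> int:
    """Count a contact's pricing-page views in the trailing window."""
    cutoff = now - timedelta(days=days)
    return sum(
        1 for e in events
        if e.contact_name == contact and e.kind == "page_view"
        and e.target == PRICING_PATH and cutoff <= e.timestamp <= now
    )


def evaluate(event: Event, history: list[Event], record_url: str) -> Optional[dict]:
    """Return a task payload if `event` crosses a trigger, else None.

    `history` holds the contact's earlier events and must not include `event`.
    """
    action = None
    if event.kind == "page_view" and event.target == PRICING_PATH and event.is_icp_account:
        visits = count_pricing_visits(history + [event], event.contact_name, event.timestamp)
        if visits > 1:  # more than once in a 7-day period
            action = f"Visited pricing page {visits} times in 7 days"
    elif event.kind == "download" and event.target in HIGH_INTENT_ASSETS:
        action = f"Downloaded {event.target}"
    elif event.kind == "video_view" and event.watch_fraction > 0.75:
        action = f"Watched {event.watch_fraction:.0%} of {event.target}"

    if action is None:
        return None
    return {
        "contact": event.contact_name,
        "company": event.company,
        "action": action,
        "priority": "high",
        "record_url": record_url,
    }
```

A "Beginner's Guide" download returns `None` here, so it never reaches an SDR, while a second pricing-page view inside seven days produces a high-priority payload with the contact, company, action, and record link.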

From Task Creation to Flawless Execution

Creating the task is only half the battle. To really empower your SDRs, that task needs to be a launchpad for immediate action, not just another notification. This is where tools like marketbetter.ai change the game.

The task doesn’t just report what happened; it tees up the next step. An integrated AI can analyze the signal (like a pricing page visit from a VP of Sales) and instantly generate a context-rich email draft. The SDR opens the task, reviews a sharp, relevant email, and hits send—all within seconds, and without ever leaving their CRM.

Let’s be honest about the two different worlds SDRs can live in. One is a frustrating grind; the other is a high-performance engine.

Manual vs Automated SDR Workflow Comparison

The table below breaks down the day-to-day reality of a traditional, manual process versus a modern, signal-driven workflow. The difference isn't just about efficiency; it's about effectiveness and SDR happiness.

| SDR Task | Traditional Manual Process (The Old Way) | Automated Workflow (The MarketBetter Way) |
|---|---|---|
| Prioritization | SDR sorts through a messy list of 100+ "leads," guessing who is most important based on title alone. Wastes hours on low-intent contacts. | System auto-prioritizes the top 5 tasks based on intent signals and account fit. SDRs only work on hot leads. |
| Research | Rep opens 10 browser tabs to research the prospect and their company from scratch, looking for any hook. | The CRM task includes key context like job title, recent company news, and the specific website engagement that triggered the alert. |
| Outreach | SDR copies and pastes a generic template, trying to customize it on the fly. Sounds robotic and gets ignored. | AI generates a personalized email draft based on the specific intent signal and persona. Outreach is hyper-relevant and effective. |
| Logging | Rep forgets to log the call or email, creating a data gap for management and losing valuable context. | Every email and call is logged automatically to the correct Salesforce or HubSpot record. Nothing falls through the cracks. |

Moving to an automated, signal-driven workflow means you’re stripping away the low-value administrative work that can eat up to two-thirds of an SDR's day.

It frees them up to focus exclusively on what they were hired to do: have high-impact conversations with prospects who are actually ready to talk. This is how you stop hoping for pipeline and start building it.

How to Measure Your Conversion Strategy's Impact

Boosting website conversions isn't a project you check off a list. It’s a constant feedback loop, and that loop is powered by cold, hard data.

If you can't measure the impact of your changes, you’re just guessing. For SDR leaders, this is the whole game—proving that your efforts are building real pipeline, not just collecting clicks.

This is about getting brutally honest with your metrics. A jump in form fills is great, but it’s worthless if your SDRs are still complaining about lead quality. The real win is connecting every website action directly to a revenue outcome, so everyone sees exactly what's working and your SDRs trust the leads they receive.

Moving From Vanity Metrics to Revenue KPIs

The old way of measuring success was painfully simple: did the number of "leads" go up? This is exactly how you create a massive disconnect between marketing and sales.

Marketing hits its MQL number, celebrates, and moves on. Meanwhile, the sales team misses quota because those "leads" were just low-intent contacts with no budget and no real interest. Sound familiar?

To fix this, you have to shift the entire conversation from top-of-funnel activity to bottom-of-funnel results. It means tracking the KPIs that your CFO and VP of Sales actually care about—the ones that directly reflect your SDR team's performance.

Stop tracking raw form fills and start tracking metrics that tell a story about pipeline and efficiency. A proper measurement framework gives your sales team confidence that the leads hitting their inbox are actually worth their time.

Here’s how to reframe the conversation from marketing metrics to sales outcomes:

| Old Metric (Vanity) | New Metric (Revenue-Focused) | Why It Matters for SDRs |
|---|---|---|
| Form Fills | MQL-to-SQL Conversion Rate | This is the ultimate test. It shows if marketing is sending leads that your sales team actually accepts and works, proving lead quality. |
| Website Traffic | Pipeline Generated from Website | Directly attributes closed-won and open opportunities to specific conversion points, showing which pages generate real money. |
| Clicks on CTAs | Cost per Qualified Meeting | Measures the true efficiency of your spend in generating real sales conversations for your reps. This is what your budget should be based on. |
| Time on Page | Sales Cycle Length by Source | Reveals if certain channels or offers bring in faster-closing deals, helping you prioritize the most efficient sources. |

This shift changes everything. You’re no longer debating button colors in a meeting. You're discussing how a single landing page tweak generated $250,000 in new pipeline last quarter for your SDR team.
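The revenue-focused metrics are simple ratios and sums once the underlying counts are available. Here is a minimal sketch of three of them; the function names are hypothetical and the sample figures are made up purely to show the arithmetic.

```python
from collections import defaultdict
from typing import Optional


def mql_to_sql_rate(mqls: int, sqls: int) -> float:
    """Share of marketing-qualified leads that sales accepted as SQLs."""
    return sqls / mqls if mqls else 0.0


def cost_per_qualified_meeting(spend: float, meetings: int) -> Optional[float]:
    """Spend divided by qualified meetings; None when no meetings were booked."""
    return spend / meetings if meetings else None


def pipeline_by_page(opportunities: list[tuple[str, float]]) -> dict[str, float]:
    """Sum opportunity value per converting page, given (page, amount) pairs."""
    totals: dict[str, float] = defaultdict(float)
    for page, amount in opportunities:
        totals[page] += amount
    return dict(totals)


# Made-up numbers, for illustration only.
print(mql_to_sql_rate(mqls=400, sqls=60))       # 0.15
print(cost_per_qualified_meeting(12_000, 40))   # 300.0
print(pipeline_by_page([("/demo", 50_000.0), ("/pricing", 20_000.0), ("/demo", 30_000.0)]))
```

Tracking these per channel or per landing page, rather than site-wide, is what lets you tie a single page change to a pipeline number.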

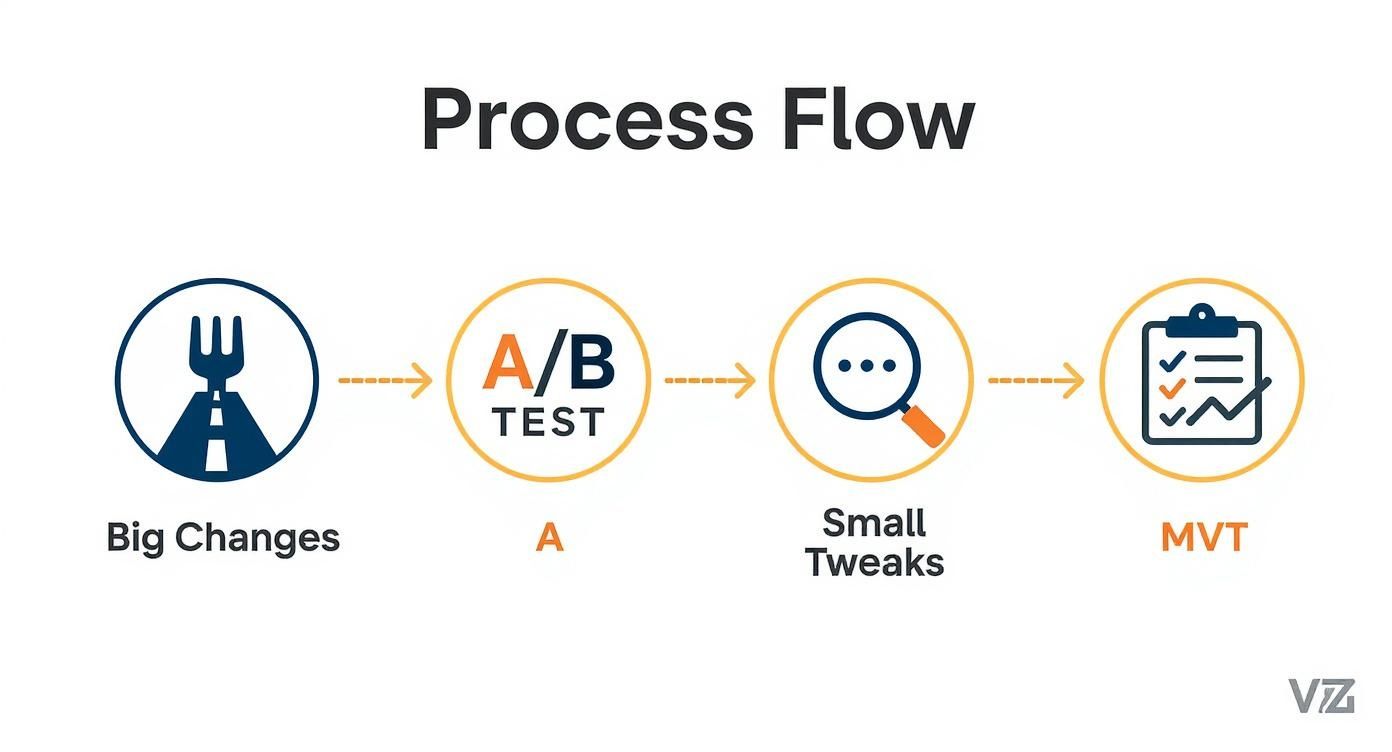

Running Simple, Hypothesis-Driven A/B Tests

Once you’re tracking the right things, you can start making improvements with real confidence. This is where A/B testing comes in, but not the kind you read about in abstract marketing blogs.

It’s not about guessing. It’s about forming a clear, testable hypothesis and letting the data tell you what your audience actually wants.

A good hypothesis isn't a random idea. It’s a sharp, focused statement: "We believe changing X into Y will result in Z." For an SDR leader, the most powerful tests are often the simplest ones that target high-intent actions.

The best A/B tests aren't about flashy redesigns. They focus on reducing friction and clarifying the value of taking the next step. A tiny change in language can have a massive impact on the quality of leads your SDRs receive.

Here’s a real-world scenario any SDR team can run:

- Hypothesis: We believe changing our primary landing page CTA from "Learn More" to "Get a Custom Demo" will increase qualified meetings booked. The new language is more specific and signals higher intent.

- The Test: Use an A/B testing tool like VWO to show 50% of your traffic the original page and 50% the new version.

- The Measurement: After a few weeks, you don't just count clicks. You track how many qualified meetings each version produced. This is the only metric that matters to your SDRs.

If Version B ("Get a Custom Demo") generates 30% more qualified meetings, and the sample is large enough that the gap is unlikely to be chance, you have a clear winner. You’ve just made a data-driven decision that directly helps your SDRs hit their number. It’s a world away from arguing about aesthetics in a conference room.
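Before declaring a winner, it helps to check whether the difference in qualified meetings could plausibly be noise. The sketch below uses a standard two-proportion z-test built only on Python's `math` module; the visitor and meeting counts are invented for illustration, and the function names are hypothetical.

```python
import math


def relative_lift(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Relative improvement of B's conversion rate over A's."""
    return (conv_b / n_b) / (conv_a / n_a) - 1


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the normal distribution
    return z, p_value


# Invented example: 2,000 visitors per variant, 20 vs. 26 qualified meetings.
lift = relative_lift(20, 2000, 26, 2000)
z, p = two_proportion_z_test(20, 2000, 26, 2000)
print(f"lift={lift:.0%}, z={z:.2f}, p={p:.2f}")
```

With these invented counts the lift is 30%, yet the p-value is well above the conventional 0.05 threshold, so the test would need to run longer. Qualified meetings are rare events, which is why tests measured on them usually need more traffic than tests measured on clicks.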

Attributing Pipeline with Clean CRM Data

Here's the catch: none of this works if your CRM data is a mess. Your Salesforce or HubSpot instance has to be the single source of truth for connecting a website conversion to a sales opportunity. This is the final, critical link in the chain.

It requires setting up proper campaign tracking and attribution models from the start. When a prospect fills out a form, that conversion event needs to be stamped onto their contact record in the CRM. From there, you can trace their entire journey—from the very first touchpoint all the way to a closed-won deal.

With this setup, you can finally answer the questions that change the business:

- Which blog post generated the most enterprise-level demos last year?

- Do our LinkedIn Ads convert into higher-value deals than our Google Ads?

- What is the true ROI of our webinar program in terms of actual revenue, not just registrants?

This level of insight is incredibly empowering. It allows you to confidently scale what works and kill what doesn't. You're no longer spreading your budget thin across a dozen initiatives; you're doubling down on the proven winners that feed your sales team high-quality opportunities.
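To make the attribution idea concrete, here is a minimal first-touch sketch: each contact's earliest recorded touchpoint gets credit for their closed-won revenue. First-touch is only one of several attribution models and is used here because it is the simplest to show; the `Touch` and `Deal` types and the source labels are hypothetical stand-ins for CRM records.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Touch:
    contact_id: str
    source: str        # e.g. "linkedin_ads", "google_ads", "webinar"
    occurred_on: date


@dataclass
class Deal:
    contact_id: str
    amount: float
    stage: str         # e.g. "closed_won" or "open"


def first_touch_revenue(touches: list[Touch], deals: list[Deal]) -> dict[str, float]:
    """Credit each closed-won deal to the contact's earliest touch source."""
    first_source: dict[str, str] = {}
    for touch in sorted(touches, key=lambda t: t.occurred_on):
        # setdefault keeps the earliest source and ignores later touches
        first_source.setdefault(touch.contact_id, touch.source)

    totals: dict[str, float] = defaultdict(float)
    for deal in deals:
        if deal.stage == "closed_won" and deal.contact_id in first_source:
            totals[first_source[deal.contact_id]] += deal.amount
    return dict(totals)
```

This only works if every conversion event is stamped on the contact record with a date and a source, which is the data hygiene the section above argues for.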

To truly master this, you need to understand the different frameworks available. You can dive deeper into this topic by reading our complete guide on how to measure marketing effectiveness, which breaks down various attribution models. This is how you stop guessing and start building a predictable, scalable revenue engine.

Got Questions? We've Got Answers.

When you're trying to dial in a website that actually fuels your sales team, a few common questions always pop up. Let's tackle them head-on, from the perspective of RevOps pros and SDR leaders who need pipeline, not just clicks.

How Is Website Conversion Different for an SDR Team?

This is the big one. For an SDR team, a "conversion" is a totally different beast than what a traditional marketer might track. A marketer might get excited about 1,000 new newsletter sign-ups. For an SDR, that’s mostly noise that clogs up their workflow.

The real difference comes down to two words: intent and quality. An SDR-focused strategy ignores low-commitment actions. Instead, it zeros in on high-intent signals that tell you a prospect is actively kicking the tires—things like repeatedly visiting your pricing page or watching a full product demo.

- Standard Conversion: Someone downloads a top-of-funnel eBook. This is a low-priority lead that might get a nurture email. An SDR should not be touching this.

- SDR-Focused Conversion: A visitor from a target account requests a custom demo right after binging a case study. This triggers an immediate, high-priority alert for an SDR to jump on within minutes.

Getting this right is the key to increasing website conversions that actually build a healthy pipeline and make your SDR team more efficient and successful.

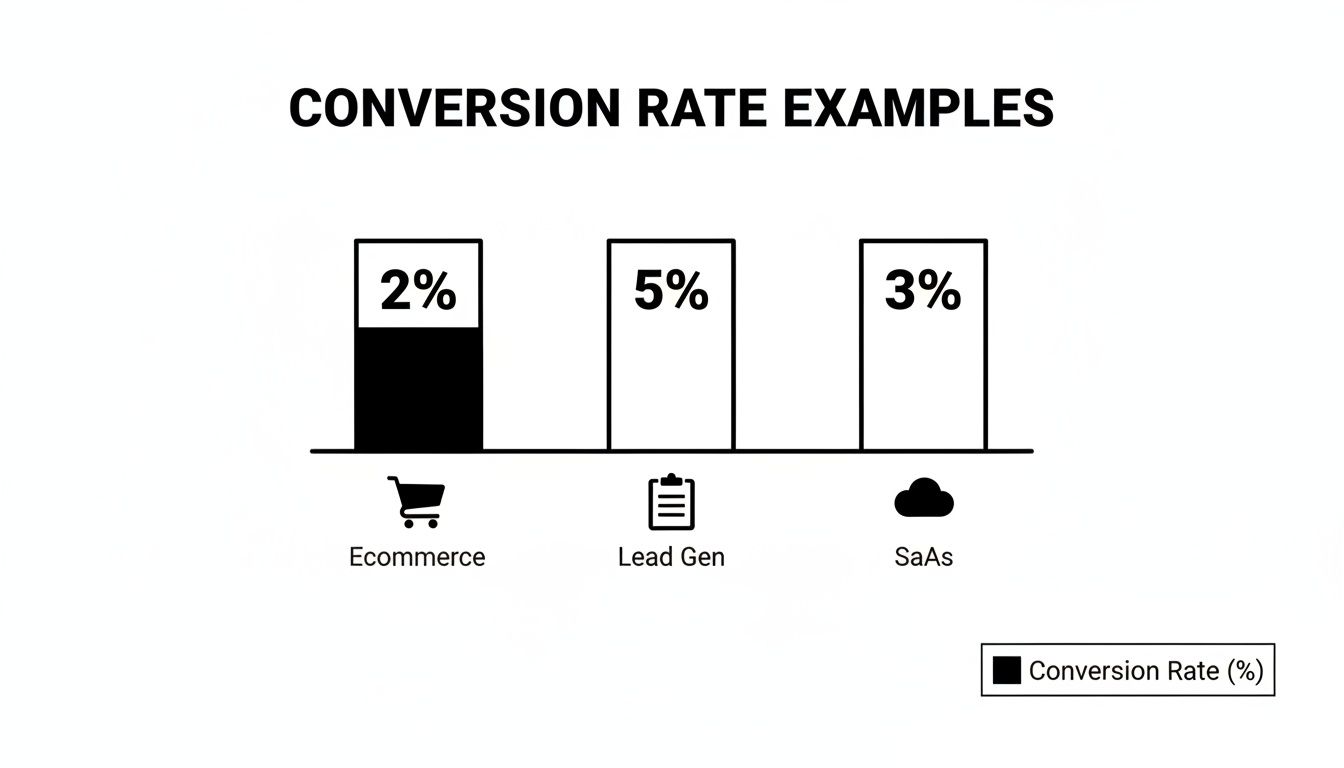

What Is a Good B2B Website Conversion Rate?

You'll see benchmarks floating around the 2% to 5% range, but honestly, that number can be a huge distraction for an SDR leader. A "good" conversion rate is all about context. A 10% conversion rate on a whitepaper download means a lot less than a 1% conversion rate on your "Request a Demo" page.

Instead of chasing some generic industry average, get obsessed with improving the conversion rates on your bottom-of-funnel pages. The goal isn’t just more conversions; it's more qualified sales conversations for your reps.

A much better metric to watch is your MQL-to-SQL conversion rate. If that number is going up, your website is doing its job: finding high-quality leads that your SDRs can actually turn into opportunities.

How Can We Get More High-Intent Conversions?

This feels counterintuitive, but the answer is to add a little bit of strategic friction. You want to make it incredibly easy for serious buyers to raise their hands while making it just a little harder for tire-kickers. It's not about being difficult; it's about qualifying in real-time so your SDRs don't have to.

Think about the difference in these two approaches:

| Action | Low-Intent Approach (More Noise for SDRs) | High-Intent Approach (More Signal for SDRs) |
|---|---|---|
| Gated Content | A generic "Download Now" button for an intro guide, open to anyone. | A specific "Get the ROI Calculator" CTA that requires a business email, filtering out students and unserious prospects. |
| Demo Request | A simple form asking only for "Name" and "Email," creating work for your SDRs to qualify. | A multi-step form that asks about team size and current challenges to pre-qualify them before they ever hit the SDR's queue. |
| Homepage CTA | A vague "Learn More" button that drops them on a features page. | A direct "See How It Works" button that links to an interactive product tour, letting prospects self-educate. |

Each of the high-intent plays acts as a filter. Sure, you might get slightly fewer total form fills, but the quality of each lead you hand over to your SDRs will be significantly higher, giving them a real shot at starting a meaningful conversation.
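The form-level filters described above can be sketched as a small pre-qualification step that runs before a submission reaches the SDR queue. Everything here is an assumption for illustration: the list of free email domains is deliberately short and incomplete, and the minimum team size of five is an arbitrary threshold you would replace with your own ICP criteria.

```python
# A short, incomplete sample of consumer email domains (assumption).
FREE_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}


def is_business_email(email: str) -> bool:
    """Rough check: well-formed address whose domain is not a known free provider."""
    local, sep, domain = email.strip().lower().rpartition("@")
    return bool(local and sep and "." in domain and domain not in FREE_EMAIL_DOMAINS)


def qualify_demo_request(email: str, team_size: int, min_team_size: int = 5) -> str:
    """Return "sdr_queue" for pre-qualified requests, otherwise "nurture"."""
    if not is_business_email(email):
        return "nurture"
    if team_size < min_team_size:
        return "nurture"
    return "sdr_queue"


print(qualify_demo_request("vp.sales@acme-corp.com", team_size=25))
print(qualify_demo_request("student123@gmail.com", team_size=1))
```

Requests that fail the filter are routed to nurture rather than discarded, so a small team that grows into your ICP later is not lost.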
