AI Contract Review for Sales Teams: How Claude Code Eliminates Legal Bottlenecks [2026]

The average B2B deal loses 3-5 days waiting for legal review.

For high-velocity sales teams, that's not just an inconvenience—it's a competitive disadvantage. While your deal sits in legal's queue, your prospect is talking to competitors who can move faster.

But here's what most sales leaders don't realize: 80% of contract reviews are routine. They're standard terms, boilerplate clauses, and minor customizations that don't actually need a lawyer's attention.

Claude Code changes this equation entirely.

The Hidden Cost of Contract Bottlenecks

Before we dive into the solution, let's quantify the problem:

Time Cost:

- Average legal review time: 3-5 business days

- Rush review requests: 48 hours minimum

- Complex deals: 2-3 weeks with revisions

Revenue Impact:

- 23% of deals stall during contract review (Gartner)

- 15% of prospects go dark while waiting

- Average deal delay costs $1,200-$5,000 in opportunity cost

Team Friction:

- Sales blames legal for slow deals

- Legal is overwhelmed with routine requests

- Everyone loses visibility into where things stand

The solution isn't hiring more lawyers. It's automating the 80% that doesn't need human judgment.

How Claude Code Transforms Contract Review

Claude Code's 200K-token context window means it can analyze an entire contract, including all exhibits, schedules, and amendments, in a single pass. As long as the document fits, there's no chunking, no lost context, and far less risk of missed cross-references.

Here's what that enables:

1. Instant Risk Flagging

Claude Code can scan any contract and flag clauses that deviate from your standard terms:

Analyze this MSA against our standard terms. Flag any clauses that:

1. Impose unlimited liability

2. Include auto-renewal provisions

3. Contain non-standard indemnification language

4. Restrict our ability to use customer logos/case studies

5. Include unusual payment terms (>Net 30)

For each flag, rate severity (Low/Medium/High/Critical) and suggest standard language that could replace it.

Within a minute or two, you get a first-pass risk assessment that could take a paralegal hours.
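A few of these checks are simple enough to run as a cheap deterministic pre-screen alongside the model call, which gives you a sanity check on its output. The sketch below is a hypothetical helper (`prescreen`, `Flag`, and the patterns are illustrative, not part of Claude Code): it uses regular expressions to spot unlimited liability, auto-renewal, and payment terms longer than Net 30. Keyword matching misses reworded clauses, so an empty result does not mean the contract is clean.

```python
import re
from dataclasses import dataclass


@dataclass
class Flag:
    category: str
    severity: str
    excerpt: str


# Hypothetical patterns: tune them to the language your counterparties actually use.
PATTERNS = {
    "unlimited_liability": (
        re.compile(r"unlimited liability|liability shall not be limited", re.I),
        "Critical",
    ),
    "auto_renewal": (re.compile(r"automatically renew|auto-renew", re.I), "Medium"),
}

# Matches "Net 60", "net60", etc. and captures the number of days.
NET_TERMS = re.compile(r"\bnet\s*(\d{1,3})\b", re.I)


def prescreen(contract_text: str, max_net_days: int = 30) -> list[Flag]:
    """Return keyword-based flags. An empty list does NOT mean the contract is clean."""
    flags = []
    for category, (pattern, severity) in PATTERNS.items():
        for match in pattern.finditer(contract_text):
            flags.append(Flag(category, severity, match.group(0)))
    # Payment terms longer than the allowed Net N window.
    for match in NET_TERMS.finditer(contract_text):
        if int(match.group(1)) > max_net_days:
            flags.append(Flag("payment_terms", "Medium", match.group(0)))
    return flags


if __name__ == "__main__":
    sample = ("Invoices are payable Net 60. This Agreement shall automatically "
              "renew for successive one-year terms.")
    for flag in prescreen(sample):
        print(flag.category, flag.severity, flag.excerpt)
```

Anything the pre-screen catches that the model's report omits is a good signal that the report needs a second look.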

2. Redline Generation

Instead of waiting for legal to mark up a contract, Claude Code can generate a redlined version with your preferred terms:

The customer sent a contract using their paper. Generate a redlined version that:

1. Replaces their liability cap with our standard ($1M or 12 months of fees)

2. Changes indemnification to mutual

3. Removes the audit clause or limits it to once per year with 30 days' notice

4. Adjusts termination for convenience to 30 days' written notice

5. Adds our standard data security addendum language

Output as a tracked-changes document with comments explaining each change.
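A real tracked-changes Word file needs a document library, but the core idea of a redline, showing what was struck and what was inserted, can be sketched with Python's standard `difflib`. The `redline` helper below is a hypothetical illustration: it compares the customer's clause to your standard clause word by word, marking deletions as `[-...-]` and insertions as `{+...+}`. Splitting on whitespace means spacing and line breaks are normalized in the output.

```python
import difflib


def redline(original: str, revised: str) -> str:
    """Word-level redline: [-deleted words-] and {+inserted words+}."""
    old_words, new_words = original.split(), revised.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words)
    out = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            out.extend(old_words[i1:i2])
        if tag in ("delete", "replace"):
            # Text the customer had that our standard language removes.
            out.append("[-" + " ".join(old_words[i1:i2]) + "-]")
        if tag in ("insert", "replace"):
            # Text our standard language adds.
            out.append("{+" + " ".join(new_words[j1:j2]) + "+}")
    return " ".join(out)


if __name__ == "__main__":
    print(redline(
        "Customer may terminate on 90 days notice.",
        "Customer may terminate on 30 days written notice.",
    ))
```

Pairing each marked change with a one-line reason is what turns this into the commented redline the prompt asks for.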

3. Plain English Summaries

Help your sales team understand what they're sending for signature:

Summarize this contract in plain English for a non-legal audience:

1. What we're agreeing to provide

2. What the customer is agreeing to pay

3. Key obligations on both sides

4. Main risks to be aware of

5. Important dates and deadlines

Keep it to one page maximum.

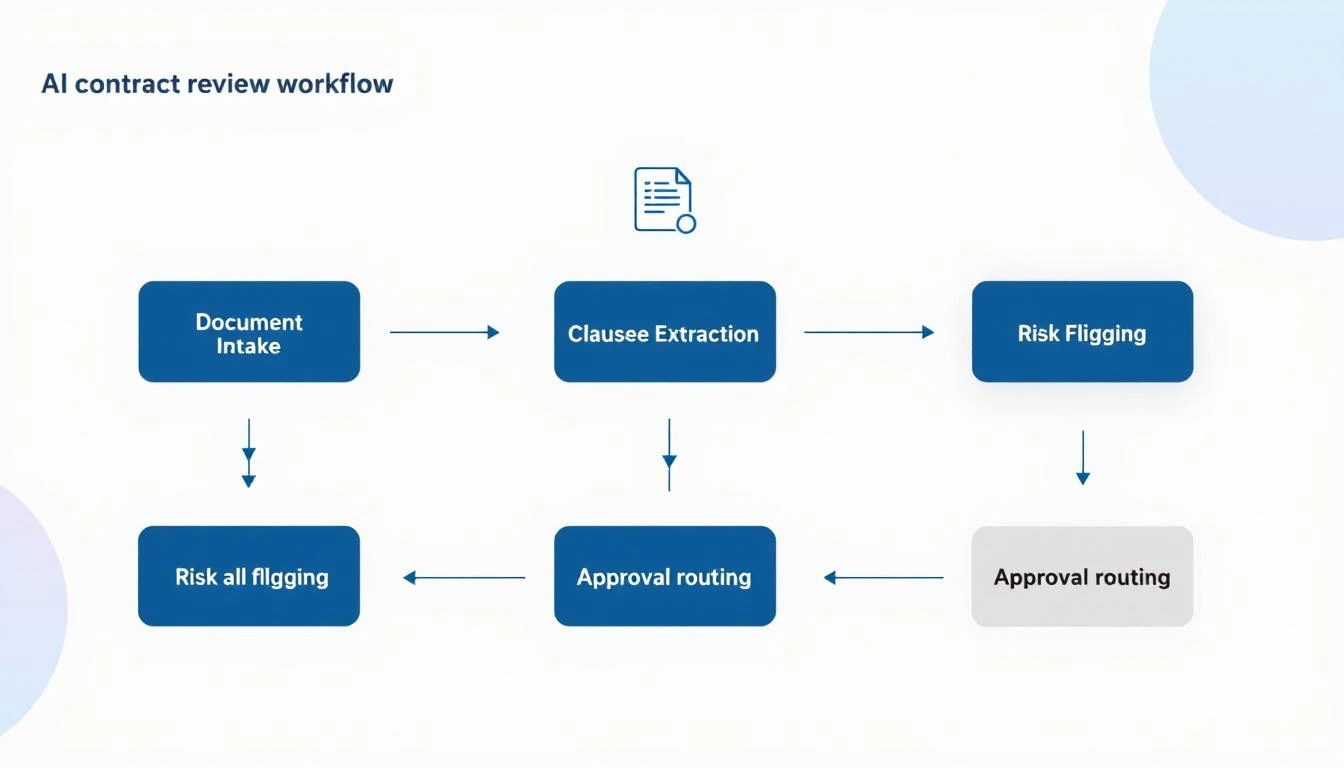

Building Your AI Contract Review Workflow

Here's a practical implementation that any sales ops team can deploy:

Step 1: Create Your Clause Library

Before Claude Code can flag deviations, it needs to know your standards. Build a reference document:

## Standard Contract Terms Reference

### Liability Cap

ACCEPTABLE: Liability limited to 12 months of fees paid

ACCEPTABLE: Liability limited to $1,000,000

REQUIRES REVIEW: Any unlimited liability language

REQUIRES REVIEW: Liability caps below $500,000

### Payment Terms

ACCEPTABLE: Net 30

ACCEPTABLE: Net 45 with approval

REQUIRES REVIEW: Net 60+

REQUIRES REVIEW: Payment upon completion only

### Termination

ACCEPTABLE: 30 days' written notice

ACCEPTABLE: Termination for cause with 30-day cure period

REQUIRES REVIEW: No termination for convenience

REQUIRES REVIEW: Penalties for early termination

[Continue for all key clauses...]
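If you keep the clause library in the plain-text format above, it is easy to load into structured data, for example to confirm that every section lists both acceptable and review-required positions before you paste it into a prompt. The parser below is a hypothetical sketch that assumes exactly the conventions shown: `### ` section headings and lines beginning with `ACCEPTABLE:` or `REQUIRES REVIEW:`.

```python
LABELS = ("ACCEPTABLE", "REQUIRES REVIEW")


def parse_clause_library(text: str) -> dict[str, dict[str, list[str]]]:
    """Map each '### Section' heading to its ACCEPTABLE / REQUIRES REVIEW entries."""
    library: dict[str, dict[str, list[str]]] = {}
    section = None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("### "):
            section = line[4:].strip()
            library[section] = {label: [] for label in LABELS}
            continue
        if section is None:
            continue  # skip the '##' title and anything before the first section
        for label in LABELS:
            prefix = label + ":"
            if line.startswith(prefix):
                library[section][label].append(line[len(prefix):].strip())
                break
    return library


def incomplete_sections(library: dict[str, dict[str, list[str]]]) -> list[str]:
    """Sections that are missing either ACCEPTABLE or REQUIRES REVIEW entries."""
    return [
        name for name, entries in library.items()
        if not all(entries[label] for label in LABELS)
    ]
```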

Step 2: Build the Review Prompt

You are a contract analyst assistant. Your job is to review contracts against our standard terms and flag anything that requires human legal review.

REFERENCE TERMS:

[Paste your clause library here]

CONTRACT TO REVIEW:

[Paste customer contract]

OUTPUT FORMAT:

1. EXECUTIVE SUMMARY (2-3 sentences)

2. RISK SCORE (Green/Yellow/Red)

3. FLAGGED CLAUSES (with page/section reference)

4. RECOMMENDED CHANGES

5. QUESTIONS FOR LEGAL (if any Red flags)
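If you run this prompt from a script instead of pasting by hand, it helps to fill the two placeholders programmatically and read the risk score back out of the response. Both helpers below are hypothetical sketches. The parser assumes the model follows the OUTPUT FORMAT and writes a line such as `2. RISK SCORE: Yellow`; verify that against real responses rather than trusting it.

```python
import re
from typing import Optional

REVIEW_TEMPLATE = """You are a contract analyst assistant. Your job is to review contracts against our standard terms and flag anything that requires human legal review.

REFERENCE TERMS:
{reference_terms}

CONTRACT TO REVIEW:
{contract}

OUTPUT FORMAT:
1. EXECUTIVE SUMMARY (2-3 sentences)
2. RISK SCORE (Green/Yellow/Red)
3. FLAGGED CLAUSES (with page/section reference)
4. RECOMMENDED CHANGES
5. QUESTIONS FOR LEGAL (if any Red flags)"""


def build_review_prompt(reference_terms: str, contract: str) -> str:
    """Fill the clause library and contract text into the review prompt."""
    return REVIEW_TEMPLATE.format(
        reference_terms=reference_terms.strip(), contract=contract.strip()
    )


# Requires a colon after RISK SCORE, tolerating markdown bold such as "**RISK SCORE:** Red".
RISK_SCORE = re.compile(r"RISK SCORE\**\s*:\s*\**\s*(Green|Yellow|Red)\b", re.I)


def extract_risk_score(response: str) -> Optional[str]:
    """Return 'Green', 'Yellow', or 'Red', or None if no score line is found."""
    match = RISK_SCORE.search(response)
    return match.group(1).capitalize() if match else None
```

Treat a `None` result as a failed review that goes to a human, not as a pass.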

Step 3: Integrate Into Your Workflow

Option A: Manual Review

- Rep uploads contract to Claude Code

- Gets instant analysis

- Decides whether to escalate to legal

Option B: Automated Triage

- Contracts flow through a central inbox

- Claude Code auto-analyzes each one

- Green = auto-approve, Yellow = sales review, Red = legal review

Option C: Full Integration

- Connect to your CLM (Ironclad, DocuSign, PandaDoc)

- Trigger Claude Code analysis on document upload

- Route based on risk score automatically
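The Green/Yellow/Red routing in Options B and C reduces to a small lookup. The queue names below are hypothetical placeholders for whatever your inbox or CLM uses. The fail-safe default, where a missing or unrecognized score goes to legal, is an assumed design choice, not something any of these tools enforce.

```python
from enum import Enum
from typing import Optional


class Queue(Enum):
    AUTO_APPROVE = "auto-approve"
    SALES_REVIEW = "sales review"
    LEGAL_REVIEW = "legal review"


ROUTES = {
    "green": Queue.AUTO_APPROVE,
    "yellow": Queue.SALES_REVIEW,
    "red": Queue.LEGAL_REVIEW,
}


def route(risk_score: Optional[str]) -> Queue:
    """Map a risk score to a review queue; missing or unknown scores fail safe to legal."""
    if not risk_score:
        return Queue.LEGAL_REVIEW
    return ROUTES.get(risk_score.strip().lower(), Queue.LEGAL_REVIEW)
```

Failing toward legal means a parsing glitch costs a little time instead of letting an unreviewed contract through.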

Real Prompts That Work

Quick Risk Assessment

Review this contract for deal-breaking clauses. I need to know in 60 seconds if this is signable as-is or needs changes. Focus on: liability, indemnification, auto-renewal, and payment terms.

Competitive Analysis

Compare this customer's proposed terms to industry standard SaaS agreements. Are they asking for anything unusual? What leverage do we have to push back?

Negotiation Prep

The customer rejected our standard liability cap and wants unlimited liability. Generate 3 alternative positions we could offer, ranked from most to least favorable to us, with talking points for each.

Post-Signature Obligation Tracking

Extract all obligations, deadlines, and milestones from this signed contract. Output as a checklist with responsible party and due date for each item.
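Once obligations are extracted, the dates are where mistakes creep in, especially notice windows that count back from a renewal date. The sketch below is a hypothetical helper, not a CLM feature: it assumes each extracted item has been turned into an `Obligation` with a due date, computes a non-renewal notice deadline by subtracting calendar days, and renders the checklist in due-date order. Check whether your contract counts calendar days or business days before relying on it.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Obligation:
    description: str
    responsible: str
    due: date


def notice_deadline(renewal_date: date, notice_days: int) -> date:
    """Last day to send notice, counting calendar days back from the renewal date."""
    return renewal_date - timedelta(days=notice_days)


def checklist(items: list[Obligation]) -> str:
    """Render obligations as an unchecked checklist, earliest due date first."""
    lines = []
    for item in sorted(items, key=lambda o: o.due):
        lines.append(f"[ ] {item.due.isoformat()}  {item.responsible}: {item.description}")
    return "\n".join(lines)


if __name__ == "__main__":
    items = [
        Obligation("Send non-renewal notice", "Customer",
                   notice_deadline(date(2026, 6, 30), 60)),
        Obligation("Deliver security report", "Vendor", date(2026, 3, 1)),
    ]
    print(checklist(items))
```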

The Results You Can Expect

Teams implementing AI-assisted contract review typically see:

| Metric | Before | After | Improvement |
|---|---|---|---|
| Average review time | 3-5 days | 4-8 hours | 80% faster |
| Legal escalation rate | 100% | 20-30% | 70-80% reduction |
| Deals stalled in legal | 23% | 8% | 65% reduction |
| Contract errors caught | 60% | 95% | +35 percentage points |
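The Improvement column mixes two kinds of change: relative reductions (23% of deals stalled falling to 8%) and percentage-point differences (60% of errors caught rising to 95%). A quick calculation with the table's own numbers shows why the distinction matters; the function names are just illustrative.

```python
def relative_change(before: float, after: float) -> float:
    """Change as a fraction of the starting value (negative means a reduction)."""
    return (after - before) / before


def point_change(before_pct: float, after_pct: float) -> float:
    """Difference in percentage points between two percentages."""
    return after_pct - before_pct


# Deals stalled in legal: 23% -> 8%
stalled = relative_change(23, 8)            # about -0.652, i.e. a 65% reduction
# Contract errors caught: 60% -> 95%
caught_points = point_change(60, 95)        # 35 percentage points
caught_relative = relative_change(60, 95)   # about 0.583, i.e. 58% more errors caught

print(f"{stalled:.0%}, {caught_points:.0f} pts, {caught_relative:.0%}")
```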

The key insight: you're not replacing legal. You're letting them focus on the 20% of contracts that actually need their expertise.

Common Objections (And How to Handle Them)

"Legal will never approve this." Start with low-risk contracts (renewals, standard deals). Prove the accuracy before expanding scope. Position it as "triage," not "replacement."

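"What about confidentiality?" Under Anthropic's commercial terms (API and enterprise plans), your inputs are not used to train models by default, but consumer plans can differ, so confirm the data-retention and training terms for the plan your team actually uses. Use enterprise agreements with appropriate data handling terms.

"Our contracts are too complex." The 200K-token context window fits most agreements, even long ones with multiple exhibits, in a single pass. Start with the standard sections and expand.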

"What if it misses something?" Build a human review step for flagged items. The AI catches the obvious issues; humans verify the edge cases.
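For the "too complex" objection, it helps to check whether a specific agreement actually fits before relying on single-pass analysis. The sketch below uses a rough rule of thumb of about 4 characters of English text per token; that ratio is an assumption, since real counts vary by tokenizer, and the window must also hold your prompt, your clause library, and the model's response. The `reserved_for_output` default is likewise a hypothetical figure.

```python
import math


def estimated_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate (assumes ~4 characters per token for English prose)."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_one_pass(contract: str, reference_terms: str = "",
                     context_limit: int = 200_000,
                     reserved_for_output: int = 8_000) -> bool:
    """True if the contract plus clause library likely fit, leaving room for the response."""
    needed = estimated_tokens(contract) + estimated_tokens(reference_terms)
    return needed + reserved_for_output <= context_limit
```

For example, a hypothetical 180,000-character contract estimates to 45,000 tokens, comfortably inside the window. Anything close to the limit deserves an exact count from a real tokenizer rather than this estimate.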

Getting Started Today

- Audit your current process: How long do contracts actually take? Where are the bottlenecks?

- Build your clause library: Document your standard terms and acceptable variations.

- Test on historical deals: Run Claude Code on 10 signed contracts and compare its flags to what legal actually flagged.

- Start with renewals: Low-risk, high-volume, perfect for automation.

- Measure and expand: Track time savings, error rates, and legal escalations.
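For the historical-deal test, it helps to score the comparison the same way every time. A minimal sketch, assuming you record flagged clauses as section identifiers (a hypothetical convention): precision is the share of AI flags that legal also flagged, and recall is the share of legal's flags that the AI caught. Recall is usually the number to watch, because a missed clause costs more than a false alarm.

```python
from dataclasses import dataclass


@dataclass
class Score:
    precision: float
    recall: float
    missed: set[str]  # sections legal flagged that the AI did not


def score_flags(ai_flags: set[str], legal_flags: set[str]) -> Score:
    """Compare AI-flagged sections with what legal actually flagged on one contract."""
    hits = ai_flags & legal_flags
    # Convention: an empty set counts as perfect (nothing to get wrong).
    precision = len(hits) / len(ai_flags) if ai_flags else 1.0
    recall = len(hits) / len(legal_flags) if legal_flags else 1.0
    return Score(precision, recall, legal_flags - ai_flags)


def score_batch(results: list[tuple[set[str], set[str]]]) -> tuple[float, float]:
    """Micro-averaged (precision, recall) across many (ai_flags, legal_flags) pairs."""
    hits = sum(len(ai & legal) for ai, legal in results)
    ai_total = sum(len(ai) for ai, _ in results)
    legal_total = sum(len(legal) for _, legal in results)
    precision = hits / ai_total if ai_total else 1.0
    recall = hits / legal_total if legal_total else 1.0
    return precision, recall
```

Reviewing the `missed` sections contract by contract shows which clause types need a sharper definition in your clause library.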

The Competitive Advantage

While your competitors are waiting for legal to review their fifteenth standard MSA of the week, you're sending signed contracts back the same day.

That's not just efficiency—it's a competitive moat.

The deals you close faster are deals your competitors never get a chance to compete for.

Ready to eliminate your contract bottleneck? Book a demo to see how MarketBetter helps sales teams accelerate every stage of the deal cycle.
