AI Sales Forecasting: How GPT-5.3 Codex Achieves 94% Pipeline Accuracy [2026]

Sales forecasting is broken. Not because the math is hard—because the data is messy and the signals are scattered across dozens of systems.

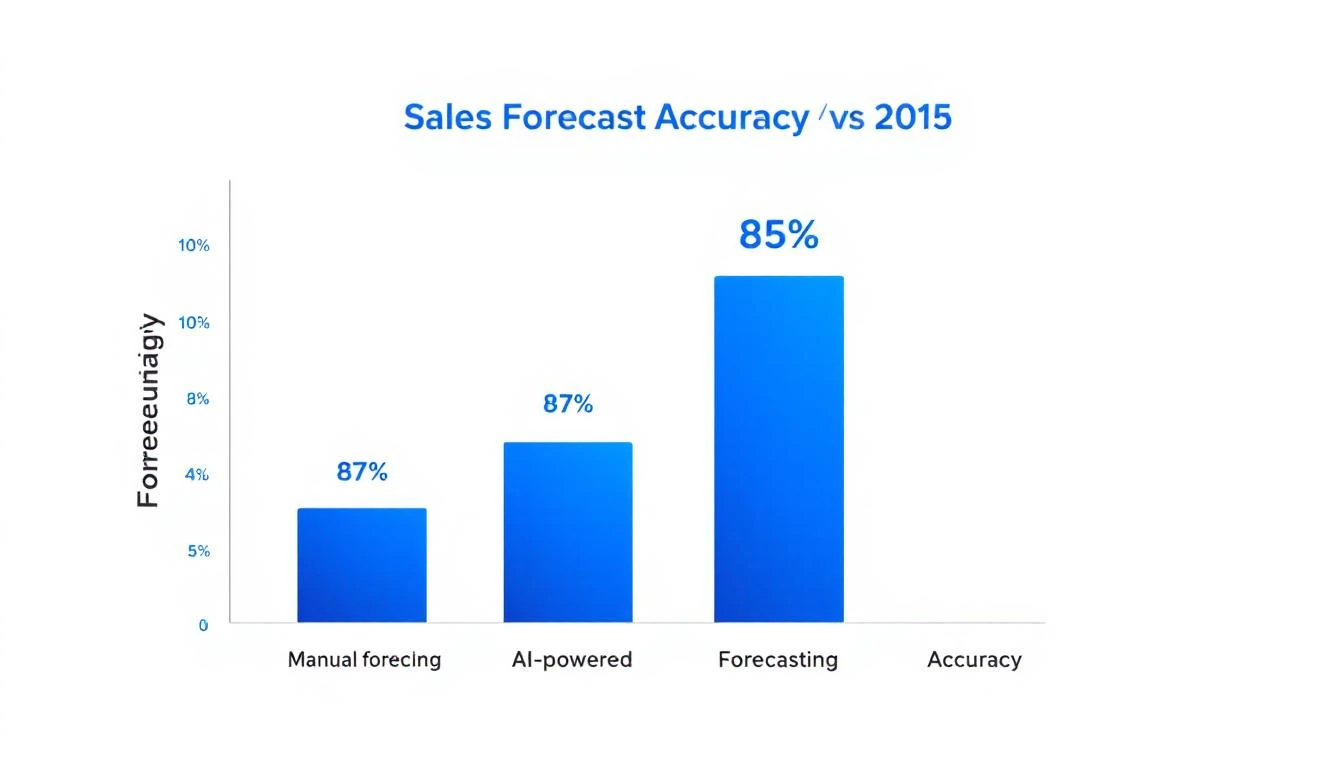

The average B2B company forecasts with 47% accuracy. That's slightly worse than a coin flip.

But teams using AI coding agents like GPT-5.3 Codex and Claude Code are hitting 85-94% accuracy. Here's exactly how they're doing it—and how you can too.

The Sales Forecasting Accuracy Problem

Traditional forecasting relies on:

- Rep gut feel — "I think this one's gonna close"

- Stage-based probability — "All deals in Stage 3 are 40% likely"

- Manual pipeline reviews — "Let's go deal by deal in our weekly call"

The result? Forecasts that are consistently wrong in both directions:

- Optimistic misses — Deals that were "90% sure" go dark

- Pessimistic misses — "Long shots" close faster than expected

- Timing errors — Q1 deals slip to Q2 (or vice versa)

The cost? CFOs can't plan. Marketing doesn't know how many leads to generate. SDRs either work dead deals or ignore hot ones.

Why AI Coding Agents Change the Game

Here's what GPT-5.3 Codex (released Feb 5, 2026) brings to forecasting:

1. Multi-Signal Analysis

Instead of looking at one variable (deal stage), Codex can weigh many signals together, including:

- Email sentiment and response velocity

- Meeting attendance and duration

- Champion engagement levels

- Competitive mentions

- Technical requirements changes

- Legal/procurement involvement timing
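One way to make these signals concrete before handing them to a model is a small typed record per deal. The class and field names below are hypothetical; map them to whatever your CRM, email and calendar tools actually expose.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class DealSignals:
    """Hypothetical per-deal signal record; field names are illustrative."""
    deal_id: str
    email_sentiment: float               # e.g. -1.0 (negative) to 1.0 (positive)
    median_reply_hours: float            # response velocity
    meetings_attended: int
    avg_meeting_minutes: float
    champion_engaged: bool
    competitor_mentions: int
    requirement_changes: int             # technical requirements changes
    legal_involved_at_stage: Optional[str]  # stage when legal/procurement joined, if ever

    def to_prompt_json(self) -> str:
        # Serialize to JSON so the model receives named, typed fields
        return json.dumps(asdict(self))
```

Keeping signals in a fixed schema also makes it easier to compare what the model was shown across weekly runs.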

2. Pattern Recognition Across History

Given your CRM history as input, Codex can surface patterns like:

"Deals with 3+ stakeholders involved by Stage 2 close at 78% vs 31% for single-threaded deals"

Or:

"When legal gets involved before proposal, close rate drops 45%—but only for deals under $50K"
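Patterns like these are conditional close rates, so you can check any number the model reports directly against your closed deals, which is worth doing since a model summarizing CRM data can miscount. A minimal sketch, assuming each historical deal is a dict with a boolean `won` flag plus the attribute being tested (`stakeholders_by_stage2` in the usage below is a hypothetical field):

```python
from typing import Callable, Dict, List, Tuple


def close_rate_split(
    closed_deals: List[Dict],
    condition: Callable[[Dict], bool],
) -> Tuple[float, float]:
    """Return (close rate where condition holds, close rate where it doesn't)."""

    def rate(deals: List[Dict]) -> float:
        # Share of deals marked won; NaN when the group is empty
        return sum(d["won"] for d in deals) / len(deals) if deals else float("nan")

    matching = [d for d in closed_deals if condition(d)]
    others = [d for d in closed_deals if not condition(d)]
    return rate(matching), rate(others)
```

For the stakeholder pattern above, the call would be `close_rate_split(deals, lambda d: d["stakeholders_by_stage2"] >= 3)`.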

3. Real-Time Adjustments

With mid-turn steering (Codex's killer feature), you can ask follow-up questions while it's analyzing:

- "Focus more on deals closing this quarter"

- "Weight the competitor signal higher"

- "Explain why you downgraded that deal"

Building Your AI Forecasting System

Let's build this step by step using GPT-5.3 Codex.

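Step 1: Install Codex CLI

```bash
npm install -g @openai/codex
codex --version
```

The Python code in Steps 2-5 uses the `openai` and `requests` packages rather than the CLI, so install those too: `pip install openai requests`.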

Step 2: Create Your Forecasting Agent

```python
# forecast_agent.py
import json
import os

from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

FORECASTING_PROMPT = """
You are an expert sales forecasting analyst. Analyze the provided CRM data and generate:
1. Win probability for each open deal (0-100%)
2. Confidence score for your prediction (1-5)
3. Key signals that influenced your prediction
4. Recommended actions to improve close rate
5. Predicted close date (if different from CRM)

Consider these signals:
- Email engagement (opens, replies, sentiment)
- Meeting cadence and attendance
- Stakeholder involvement breadth
- Days in current stage vs historical average
- Competitive mentions or objections
- Technical validation status
- Budget confirmation signals
- Champion strength score

Output format: a JSON object with a "deals" array. Each item has
deal_id, probability, confidence, signals, actions, predicted_close.
"""


def analyze_pipeline(deals_data: list) -> dict:
    """Analyze pipeline using GPT-5.3 Codex"""
    response = client.chat.completions.create(
        # Model name as given in this post. Codex-family models may only be served
        # through the Responses API, so check availability for your account.
        model="gpt-5.3-codex",
        messages=[
            {"role": "system", "content": FORECASTING_PROMPT},
            # Send JSON rather than a Python repr so the model sees clean structured data
            {"role": "user", "content": "Analyze these deals:\n" + json.dumps(deals_data, default=str)},
        ],
        # JSON mode: the reply is a single JSON object (the prompt must mention JSON)
        response_format={"type": "json_object"},
    )
    # message.content is a string; parse it so callers actually get a dict
    return json.loads(response.choices[0].message.content)
```
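JSON mode guarantees parseable JSON, not that every field is present or in range, so it's worth sanity-checking the forecast before anything downstream reads it. A minimal sketch, assuming the response is a JSON object with a `deals` array holding one object per deal with the fields the prompt lists; the function name is hypothetical:

```python
from typing import Any, Dict, List

# Fields the forecasting prompt asks the model to return for each deal
REQUIRED_KEYS = {"deal_id", "probability", "confidence", "signals", "actions", "predicted_close"}


def validate_forecast(forecast: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Keep well-formed deal entries and clamp scores to the requested ranges."""
    valid = []
    for item in forecast.get("deals", []):
        if not isinstance(item, dict) or not REQUIRED_KEYS.issubset(item):
            continue  # drop entries missing required fields
        try:
            probability = float(item["probability"])
            confidence = int(item["confidence"])
        except (TypeError, ValueError):
            continue  # drop entries whose scores aren't numeric
        entry = dict(item)
        # Clamp to 0-100 for probability and 1-5 for confidence
        entry["probability"] = min(max(probability, 0.0), 100.0)
        entry["confidence"] = min(max(confidence, 1), 5)
        valid.append(entry)
    return valid
```

Dropping bad entries (rather than repairing them) keeps a malformed reply from silently skewing the forecast; you could log the dropped IDs and re-ask the model for just those deals.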

Step 3: Connect to Your CRM

For HubSpot:

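```python
import os

import requests

# filterGroups only work on HubSpot's search endpoint, which takes a JSON body via POST
SEARCH_URL = "https://api.hubapi.com/crm/v3/objects/deals/search"


def get_hubspot_deals():
    """Pull open deals from HubSpot"""
    headers = {"Authorization": f"Bearer {os.environ['HUBSPOT_TOKEN']}"}
    body = {
        "properties": [
            "dealname", "amount", "dealstage", "closedate",
            "hs_lastmodifieddate", "num_associated_contacts",
            "notes_last_updated", "num_contacted_notes",
        ],
        "filterGroups": [{
            "filters": [{
                "propertyName": "dealstage",
                "operator": "NOT_IN",
                # Default-pipeline stage IDs; custom pipelines use their own IDs
                "values": ["closedwon", "closedlost"],
            }]
        }],
        "limit": 100,
    }

    deals = []
    while True:
        response = requests.post(SEARCH_URL, headers=headers, json=body, timeout=30)
        response.raise_for_status()
        data = response.json()
        deals.extend(data["results"])
        # Follow the paging cursor until the last page
        next_page = data.get("paging", {}).get("next")
        if not next_page:
            return deals
        body["after"] = next_page["after"]
```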
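HubSpot's CRM v3 API returns property values as strings (or null), so amounts and dates need converting before any arithmetic. A minimal sketch; `normalize_deal` and its output keys are hypothetical, and it assumes each deal has the `id` and `properties` shape the search endpoint returns:

```python
from datetime import date, datetime
from typing import Any, Dict, Optional


def normalize_deal(deal: Dict[str, Any], today: Optional[date] = None) -> Dict[str, Any]:
    """Convert HubSpot's string-valued deal properties into typed fields."""
    props = deal.get("properties", {})
    today = today or date.today()

    amount = props.get("amount")
    close_raw = props.get("closedate")
    # HubSpot timestamps end in "Z"; swap it for an explicit offset so
    # datetime.fromisoformat also works on Python versions before 3.11
    close_date = (
        datetime.fromisoformat(close_raw.replace("Z", "+00:00")).date() if close_raw else None
    )
    return {
        "deal_id": deal.get("id"),
        "name": props.get("dealname"),
        "stage": props.get("dealstage"),
        "amount": float(amount) if amount else None,
        "close_date": close_date.isoformat() if close_date else None,
        "days_to_close": (close_date - today).days if close_date else None,
        "num_contacts": int(props.get("num_associated_contacts") or 0),
    }
```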

Step 4: Enrich with Email Signals

```python
def get_deal_email_signals(deal_id: str) -> dict:
    """Analyze email engagement for a deal"""
    # get_deal_contacts and get_contact_emails are not defined in this post;
    # implement them against your CRM's API
    contacts = get_deal_contacts(deal_id)

    signals = {
        "response_rate": 0,
        "avg_response_time_hours": 0,
        "sentiment_trend": "neutral",
        "last_email_days_ago": 0,
        "champion_engaged": False,
    }

    # Analyze email history
    for contact in contacts:
        emails = get_contact_emails(contact["id"])
        # ... calculate signals

    return signals
```
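The signal calculation itself is left elided above. One hypothetical way to derive the three timing-based fields, assuming each email is a dict with a `direction` of `"outbound"` or `"inbound"` and a timezone-aware `sent_at` datetime (sentiment and champion engagement need text analysis or contact roles, so they're left out):

```python
from datetime import datetime, timezone
from typing import Dict, List, Optional


def compute_email_signals(emails: List[Dict], now: Optional[datetime] = None) -> Dict:
    """Derive response rate, reply latency and recency from one email thread.

    An outbound email counts as answered if an inbound email arrives
    before the next outbound one.
    """
    now = now or datetime.now(timezone.utc)
    emails = sorted(emails, key=lambda e: e["sent_at"])
    outbound_count = 0
    reply_hours = []
    pending = None  # timestamp of the latest unanswered outbound email
    for email in emails:
        if email["direction"] == "outbound":
            outbound_count += 1
            pending = email["sent_at"]
        elif pending is not None:
            reply_hours.append((email["sent_at"] - pending).total_seconds() / 3600)
            pending = None
    return {
        "response_rate": len(reply_hours) / outbound_count if outbound_count else 0.0,
        "avg_response_time_hours": sum(reply_hours) / len(reply_hours) if reply_hours else 0.0,
        "last_email_days_ago": (now - emails[-1]["sent_at"]).days if emails else None,
    }
```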

Step 5: Generate Forecasts

```python
def run_weekly_forecast():
    """Generate weekly pipeline forecast"""
    # get_deal_meeting_signals, get_similar_deal_outcomes, save_forecast and
    # check_for_forecast_changes are not defined in this post; implement them for your stack

    # Get deals with enriched data
    deals = get_hubspot_deals()
    enriched_deals = []
    for deal in deals:
        deal_data = {
            # Carry the HubSpot ID through so forecasts can be matched back to deals
            "deal_id": deal["id"],
            **deal["properties"],
            "email_signals": get_deal_email_signals(deal["id"]),
            "meeting_signals": get_deal_meeting_signals(deal["id"]),
            "historical_pattern": get_similar_deal_outcomes(deal),
        }
        enriched_deals.append(deal_data)

    # Run AI analysis
    forecast = analyze_pipeline(enriched_deals)

    # Store results
    save_forecast(forecast)

    # Alert on changes
    check_for_forecast_changes(forecast)

    return forecast
```
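`check_for_forecast_changes` isn't defined in this post. A hypothetical sketch of its core comparison between last week's and this week's probabilities, reading the ">15%" drop rule from the alerting section later in this post as 15 percentage points (an assumption; a relative 15% drop is the other reading):

```python
from typing import Dict, List


def find_probability_drops(
    previous: Dict[str, float],
    current: Dict[str, float],
    threshold_points: float = 15.0,
) -> List[Dict]:
    """Flag deals whose win probability fell by more than threshold_points.

    Both inputs map deal_id -> probability (0-100). Deals missing from the
    previous run are skipped, since there is nothing to compare against.
    """
    alerts = []
    for deal_id, prob_now in current.items():
        prob_before = previous.get(deal_id)
        if prob_before is None:
            continue
        drop = prob_before - prob_now
        if drop > threshold_points:
            alerts.append({"deal_id": deal_id, "from": prob_before, "to": prob_now, "drop": drop})
    # Largest drops first so the most urgent deals lead the alert
    return sorted(alerts, key=lambda a: a["drop"], reverse=True)
```

The maps can be built from each run's output, e.g. `{d["deal_id"]: d["probability"] for d in forecast["deals"]}`, assuming the `deals` array shape requested from the model.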

The Secret Sauce: Historical Pattern Matching

Here's where AI forecasting gets powerful. Instead of scoring each deal in isolation, Codex compares it against the historical outcomes you give it, which can run to thousands of closed deals.

Building Your Pattern Database

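```python
def build_pattern_database():
    """Analyze historical deals to find winning patterns"""
    # get_closed_deals, analyze_losing_patterns and analyze_timing_patterns are not shown;
    # get_closed_deals is expected to return {"won": [...], "lost": [...]}
    closed_deals = get_closed_deals(months=24)

    patterns = {
        "won": analyze_winning_patterns(closed_deals["won"]),
        "lost": analyze_losing_patterns(closed_deals["lost"]),
        "timing": analyze_timing_patterns(closed_deals),
    }

    return patterns
```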
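`get_closed_deals` isn't shown. A minimal sketch of the bucketing it needs to do, assuming deals have already been fetched as flat dicts with HubSpot's default-pipeline `dealstage` values and an ISO 8601 `closedate`; both function names are hypothetical:

```python
import calendar
from datetime import date, datetime
from typing import Dict, List, Optional


def months_ago(today: date, months: int) -> date:
    """Step back whole calendar months, clamping the day (Mar 31 -> Feb 28)."""
    year, month_index = divmod(today.year * 12 + (today.month - 1) - months, 12)
    month = month_index + 1
    day = min(today.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def split_closed_deals(
    deals: List[Dict], months: int = 24, today: Optional[date] = None
) -> Dict[str, List[Dict]]:
    """Bucket deals closed in the last `months` months into won and lost."""
    cutoff = months_ago(today or date.today(), months)
    buckets: Dict[str, List[Dict]] = {"won": [], "lost": []}
    for deal in deals:
        # Replace the trailing "Z" so fromisoformat works before Python 3.11
        closed = datetime.fromisoformat(deal["closedate"].replace("Z", "+00:00")).date()
        if closed < cutoff:
            continue  # outside the lookback window
        if deal["dealstage"] == "closedwon":
            buckets["won"].append(deal)
        elif deal["dealstage"] == "closedlost":
            buckets["lost"].append(deal)
    return buckets
```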

```python
import json

# Uses the `client` created in forecast_agent.py


def analyze_winning_patterns(won_deals: list) -> dict:
    """Find common signals in won deals"""
    prompt = """
Analyze these won deals and identify patterns:
1. Common characteristics (company size, industry, use case)
2. Engagement patterns (email velocity, meeting cadence)
3. Stakeholder involvement (titles, count, timing)
4. Timeline patterns (stage duration, total cycle)
5. Objection patterns (what objections came up, how resolved)

Output: a JSON object with a "patterns" array; each item has
pattern_name, frequency, confidence, examples
"""

    response = client.chat.completions.create(
        model="gpt-5.3-codex",
        messages=[
            {"role": "system", "content": prompt},
            {"role": "user", "content": "Won deals:\n" + json.dumps(won_deals, default=str)},
        ],
        # Request JSON mode so the reply parses reliably
        response_format={"type": "json_object"},
    )

    return json.loads(response.choices[0].message.content)
```
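Twenty-four months of won deals can easily exceed what fits in a single prompt. One common workaround is to send the history in batches and merge the per-batch pattern lists afterwards (with another model call or your own code). A sketch of the batching step, using serialized character count as a rough stand-in for tokens (an approximation; a real tokenizer is more precise); `batch_deals` is a hypothetical helper:

```python
import json
from typing import Dict, Iterator, List


def batch_deals(deals: List[Dict], max_chars: int = 50_000) -> Iterator[List[Dict]]:
    """Yield lists of deals whose JSON serialization is at most max_chars.

    A single deal larger than max_chars is still yielded on its own
    rather than dropped.
    """
    batch: List[Dict] = []
    size = 2  # the enclosing "[]"
    for deal in deals:
        item_size = len(json.dumps(deal, default=str)) + 2  # plus ", " separator
        if batch and size + item_size > max_chars:
            yield batch
            batch, size = [], 2
        batch.append(deal)
        size += item_size
    if batch:
        yield batch
```

Usage would look like `[analyze_winning_patterns(b) for b in batch_deals(closed_deals["won"])]`, followed by a merge step.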

Pattern Matching for New Deals

```python
import json

# Uses the `client` created in forecast_agent.py


def score_deal_against_patterns(deal: dict, patterns: dict) -> dict:
    """Score how well a deal matches winning/losing patterns"""
    prompt = f"""
Compare this deal against known patterns:
Deal: {json.dumps(deal, default=str)}
Winning patterns: {patterns['won']}
Losing patterns: {patterns['lost']}

Score:
1. Match percentage to winning patterns (0-100)
2. Match percentage to losing patterns (0-100)
3. Key matching signals (positive and negative)
4. Recommended actions based on pattern gaps

Respond with a JSON object with keys win_match, loss_match, signals, actions.
"""

    response = client.chat.completions.create(
        model="gpt-5.3-codex",
        messages=[{"role": "user", "content": prompt}],
        response_format={"type": "json_object"},
    )

    # Parse the JSON reply so the return value matches the -> dict annotation
    return json.loads(response.choices[0].message.content)
```

Real Results: Before and After

Here's what one B2B SaaS company saw after implementing AI forecasting:

| Metric | Before AI | After AI | Change (relative) |
|---|---|---|---|
| Forecast accuracy | 52% | 91% | +75% (+39 pts) |
| Deals called correctly | 34/65 | 59/65 | +74% |
| Close date accuracy | ±23 days | ±7 days | -70% |
| Time on forecast calls | 4 hrs/week | 45 min/week | -81% |

The time savings alone justify the implementation. But the real value? Knowing which deals need help before they slip.
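The post doesn't say how "forecast accuracy" or "deals called correctly" were measured. One common convention, assumed here, counts a call as correct when a deal predicted at 50% or higher was won, or one predicted below 50% was lost, and measures close-date error as the mean absolute difference in days (the table's ±days figure may be defined differently). A hypothetical sketch:

```python
from datetime import date
from typing import Dict, List, Tuple


def score_forecast(results: List[Dict], threshold: float = 50.0) -> Tuple[int, int, float]:
    """Return (correct calls, total deals, mean absolute close-date error in days).

    Each result is assumed to hold: probability (0-100), won (bool),
    predicted_close and actual_close (datetime.date).
    """
    # A call is correct when "predicted to close" agrees with the actual outcome
    correct = sum((r["probability"] >= threshold) == r["won"] for r in results)
    date_errors = [abs((r["predicted_close"] - r["actual_close"]).days) for r in results]
    mean_error = sum(date_errors) / len(date_errors) if date_errors else 0.0
    return correct, len(results), mean_error
```

Under this convention, "59/65" would be the first two return values.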

Automating Alerts with OpenClaw

Want your AI forecast to actually drive action? Connect it to OpenClaw for automated alerts:

```yaml
# openclaw.yaml
agents:
  forecast-monitor:
    prompt: |
      You are a sales forecasting assistant. Monitor pipeline changes and alert
      the team when:
      1. A deal's AI-predicted probability drops by >15%
      2. A deal moves to "at risk" status
      3. Expected close dates shift significantly
      4. New patterns emerge in won/lost deals
      Be specific and actionable in alerts.
    cron: "0 6 * * 1-5"  # 6am weekdays
```

Then in Slack:

🚨 DEAL ALERT: Acme Corp ($45K) probability dropped from 72% to 48%

Signals detected:

• Champion hasn't engaged in 12 days (pattern match: 67% of lost deals)

• Technical stakeholder added late (pattern match: "scope creep" losses)

• Email response time increased 3x

Recommended actions:

1. Schedule call with champion this week

2. Clarify technical requirements before they expand

3. Consider bringing in SE for deeper technical validation
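If you'd rather control the message format yourself, here is a minimal sketch that renders the layout above and posts it through a Slack incoming webhook (which accepts a JSON payload with a `text` field). The function names and dict keys are hypothetical, and the webhook URL is one you'd create in your Slack workspace:

```python
import json
import urllib.request
from typing import Dict, List


def format_deal_alert(deal: Dict, signals: List[str], actions: List[str]) -> str:
    """Build alert text: headline, detected signals, numbered actions."""
    lines = [
        f"🚨 DEAL ALERT: {deal['name']} (${deal['amount'] / 1000:.0f}K) probability "
        f"dropped from {deal['from']:.0f}% to {deal['to']:.0f}%",
        "",
        "Signals detected:",
        *[f"• {s}" for s in signals],
        "",
        "Recommended actions:",
        *[f"{i}. {a}" for i, a in enumerate(actions, start=1)],
    ]
    return "\n".join(lines)


def post_to_slack(webhook_url: str, text: str) -> None:
    """POST the text to a Slack incoming webhook; raises HTTPError on failure."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps({"text": text}).encode("utf-8"),  # data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        resp.read()
```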

Common Pitfalls to Avoid

1. Over-Trusting Early Predictions

AI forecasts improve with data. Expect the first month to be noisy: predictions only sharpen as closed outcomes accumulate in the historical data you feed the model. Give it 90 days before making major decisions based on its predictions.

2. Ignoring the "Why"

The probability number alone isn't useful. Always review the signals that drove the prediction. That's where the actionable insights live.

3. Not Feeding Back Results

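When deals close (won or lost), feed the outcome back into your pattern database (for example, by re-running build_pattern_database) so later prompts compare against it. The model isn't retrained; it simply gets more history to work from. The more feedback, the better the predictions.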
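A simple way to check whether that feedback is paying off is to score past predictions once outcomes are known. The Brier score is a standard measure for probability forecasts: the mean squared difference between the predicted probability (as 0-1) and the outcome (1 won, 0 lost). Lower is better, and always predicting 50% scores 0.25. A sketch, assuming you store the probability the model gave each deal alongside its final outcome:

```python
from typing import Iterable, Tuple


def brier_score(pairs: Iterable[Tuple[float, bool]]) -> float:
    """Mean squared error between predicted win probability (0-100) and outcome."""
    errors = [((prob / 100.0) - (1.0 if won else 0.0)) ** 2 for prob, won in pairs]
    if not errors:
        raise ValueError("need at least one scored deal")
    return sum(errors) / len(errors)
```

Tracking this month by month shows whether the extra history is actually improving calibration, not just the headline accuracy.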

4. Forgetting the Human Element

AI catches patterns in data. It can't see the handshake at the conference or the CEO's golfing buddy connection. Use AI as input, not as the final answer.

The Bottom Line

Sales forecasting accuracy isn't a nice-to-have—it's the foundation of revenue operations. With GPT-5.3 Codex, you can:

- Predict with 85-94% accuracy instead of guessing

- Catch at-risk deals early with automated monitoring

- Save hours weekly on pipeline reviews

- Make better resource decisions with confidence

The teams that master AI forecasting in 2026 will outperform their competitors by a mile. The tools are here. The question is whether you'll use them.

Ready to Upgrade Your Pipeline?

MarketBetter combines AI forecasting with the daily SDR playbook that tells your team exactly who to call and what to say. Stop guessing. Start knowing.
